As Google continues to explore its re-entry into China, it seems to be looking at creativity and entertainment as new planks of engagement with Chinese users. Over the last few months, Google has been making inroads into the Chinese gaming space in particular: it invested 500 million RMB (about 78 million USD) into Chinese game streaming platform Chushou TV in December, and in January announced a patent agreement with Tencent, the Chinese tech giant with a particular strength in online gaming.

Google also recently opened an AI research lab in Beijing, and last month held a conference in the Chinese capital — where key properties such as YouTube are still blocked — exploring the application of AI to various fields, including gaming and music production.

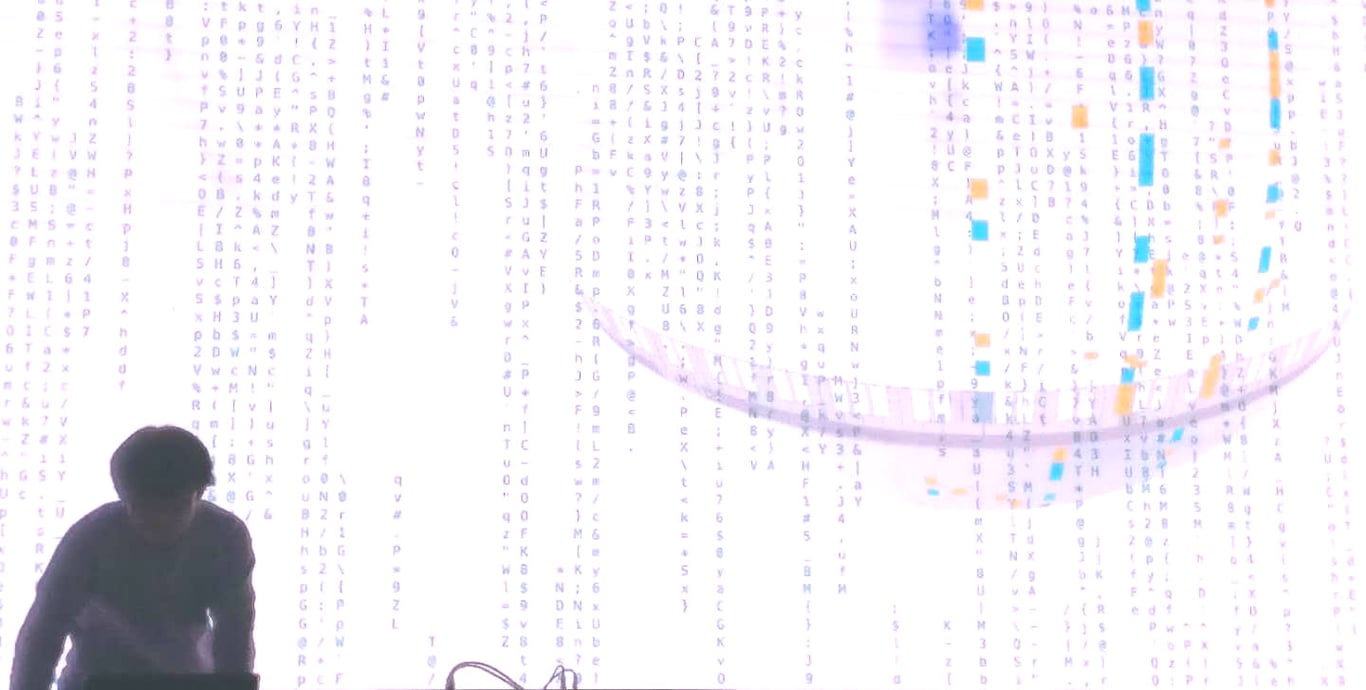

Musical composition and performance are interesting and somewhat counterintuitive fields in which Google is pushing AI development. One presentation at last month’s conference focused on Magenta, a research project that enlists Google’s TensorFlow machine learning platform in “generating songs, images, drawings, and other materials.”

Another was given by Hangzhou-based musician, coder, and educator Wang Changcun, who has been pregaming a machine-friendly musical future since long before Magenta came along: his 2012 album Homepage was entirely generated from custom JavaScript patches he programmed and inserted into Google Chrome, and his 2017 live album The Reality You Are Trying To Visit No Longer Exists treats “internet surfing” as a key performative act.

I caught up with Wang after his Google performance to ask a few questions about AI Duet (the software instrument created by Google, which Wang demonstrated) and his general thoughts on whether an autonomous AI might ultimately generate truly creative music and, if so, how we’d even know:

Can you give a short description of your technical background? I know that you’ve used code in your music-making process for quite some time, including a JS-based composition for Chrome and custom-built Max/MSP patches/plugins…

Wang Changcun: My major in college was electronic information engineering. After graduating I worked in web development and ActionScript development, and have spent several years working in art institutions.

What interests you about making code-based music?

I like the idea of using the simplest possible means to make music, and sometimes the simplest way to hear a piece of music is to use Max/MSP or code to make it. The thing about coding that particularly attracts me is that it allows you to open up more complex possibilities.

You recently performed on an instrument Google is developing called AI Duet. Can you describe how it works in basic terms?

AI Duet basically feeds back more notes on top of the notes you play, based on a lot of data and Google’s AI algorithm.

What was your experience using Google’s AI Duet instrument?

There were a few surprises, such as the fact that the Chrome browser can directly receive MIDI signals through the Web MIDI API, allowing the browser to be used as a musical instrument more conveniently than I had previously thought.

Did you learn anything interesting at the Google event?

Of course — this is the first time I’ve collaborated with a tech company like Google. Watching other people’s talks at the event, I got to better understand how people in other fields view AI technology. This was quite fascinating.

Do you think AI will ultimately be able to create music or art at the same level of sophistication as humans? Or will there always be a fundamental difference between “human art” and “AI art”? How will we know?

I really look forward to the point when AI can spontaneously create music, but it will not at all be what we consider to be “human music.” At least, it won’t be an imitation of human music. Any creations by an AI should be considered a new thing, not a duplication or extension of humanity.

Do you have any other thoughts about machine learning/AI in the field of music?

How should I put it… I guess the successful application of AI in the field of business may not be applicable to the field of music. Of course, there’s massive space for the development of AI in every field, but not necessarily in accordance with the current direction of everyone’s imagination…

—

Find Wang Changcun’s previous compositions with Chrome-as-instrument (and other esoteric experiments) here, and check out other artists in the same vein via the label he co-founded, play rec.